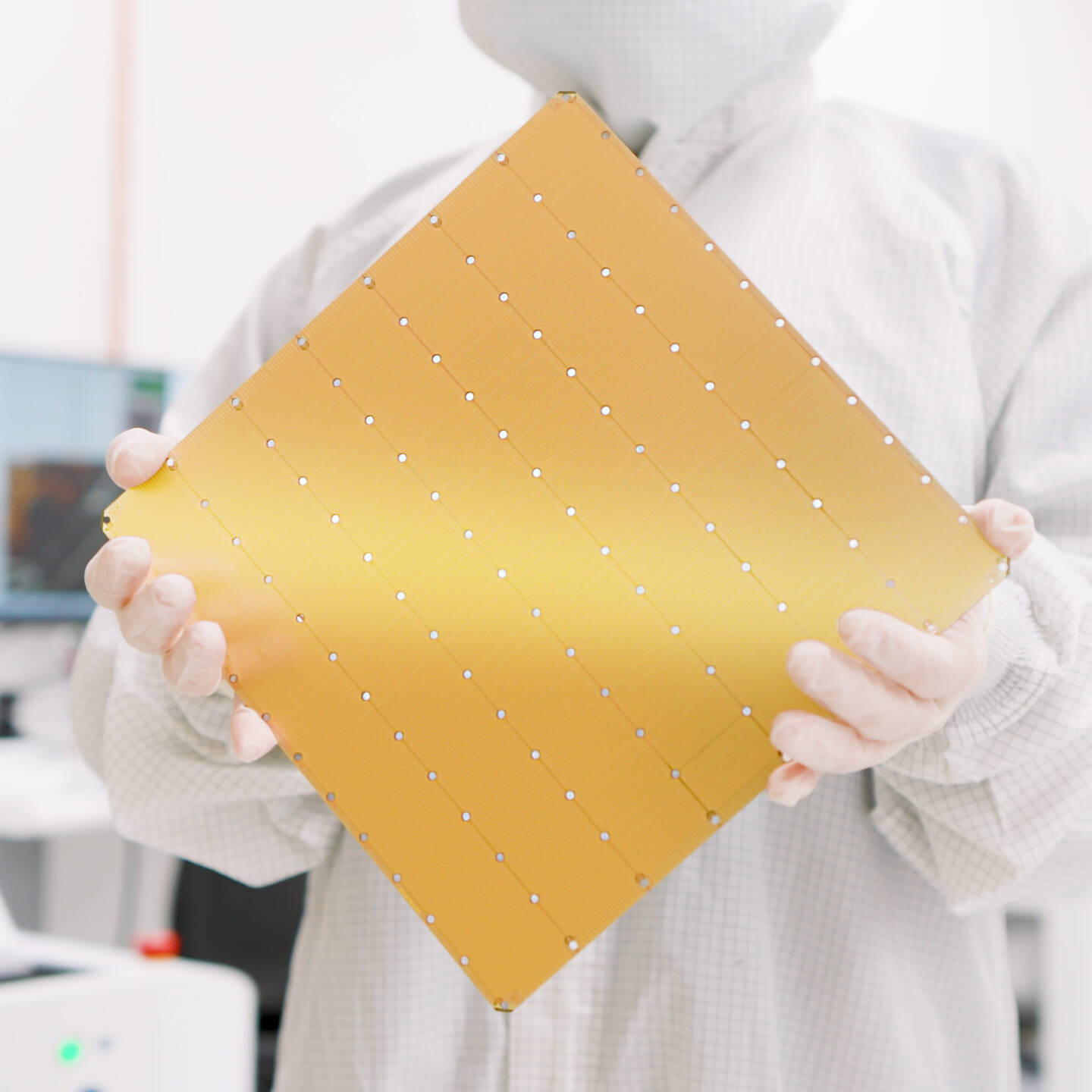

Cerebras Systems designs the largest computer chip in the world, built from a whole silicon wafer instead of dicing the wafer into smaller chips. Fittingly, the chip is called the Wafer Scale Engine (WSE), and its third generation was recently released. The WSE-3 is the same physical size as its predecessors, and it is 57 times larger than NVIDIA's H100 AI GPU. It is a square chip roughly 22 cm on a side containing 4 trillion transistors, organized into 900,000 cores with 44 GB of on-chip memory. Together these deliver 125 petaFLOPS of AI compute, twice the performance of the previous WSE-2 at the same power consumption.
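To put the headline figures in per-core terms, here is a rough sketch that simply divides the quoted totals by the quoted core count. The helper name `per_core` is hypothetical, and the results are plain averages: they assume every transistor, byte, and FLOP is spread evenly across cores, which a real chip does not do.

```python
# Back-of-the-envelope averages from the figures quoted in the article.
# Assumption: totals are divided evenly across cores; real silicon also
# spends transistors on interconnect and other non-core logic.

CORES = 900_000
TRANSISTORS = 4e12          # about 4 trillion
MEMORY_BYTES = 44e9         # 44 GB, taking 1 GB = 10**9 bytes
PEAK_FLOPS = 125e15         # 125 petaFLOPS


def per_core(total: float, cores: int = CORES) -> float:
    """Return the simple average of `total` per core."""
    return total / cores


if __name__ == "__main__":
    print(f"transistors per core: {per_core(TRANSISTORS) / 1e6:.2f} million")
    print(f"memory per core:      {per_core(MEMORY_BYTES) / 1e3:.1f} KB")
    print(f"peak FLOPS per core:  {per_core(PEAK_FLOPS) / 1e9:.1f} GFLOPS")
```

On these averages, each core gets a few million transistors and a few tens of kilobytes of memory, which gives a sense of how small and numerous the cores are.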

The WSE-3 ships in a system called the CS-3, which can be clustered to scale up compute. The maximum cluster size has been raised to 2,048 CS-3 systems, for up to 256 exaFLOPS of FP16 compute. Cerebras claims this is enough to train models 10 times larger than GPT-4 and Google Gemini. According to the company, models with up to 24 trillion parameters can be held in a single logical memory space without partitioning or refactoring. By its account, training a 1 trillion parameter model on the CS-3 is as straightforward as training a 1 billion parameter model on GPUs.
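The cluster figure follows directly from the per-system number: 2,048 systems at 125 petaFLOPS each. The sketch below checks that arithmetic and also estimates how much memory the weights of a 24 trillion parameter model would occupy. The function names are hypothetical, the compute figure is a peak that assumes perfect scaling, and the 2 bytes per parameter is an assumption (16-bit weights only, with no optimizer state or activations).

```python
# Sanity-check the cluster arithmetic quoted in the article.

def cluster_exaflops(num_systems: int, petaflops_per_system: float) -> float:
    """Peak cluster compute in exaFLOPS, assuming perfect linear scaling."""
    return num_systems * petaflops_per_system / 1000  # 1 exaFLOPS = 1000 petaFLOPS


def weights_terabytes(num_params: int, bytes_per_param: int = 2) -> float:
    """Memory for model weights alone, in TB (assumes 16-bit weights by default)."""
    return num_params * bytes_per_param / 1e12


if __name__ == "__main__":
    print(cluster_exaflops(2048, 125))       # 256.0
    print(weights_terabytes(24 * 10**12))    # 48.0
```

Under that 16-bit assumption, the weights of a 24 trillion parameter model alone come to about 48 TB, far more than the 44 GB on a single chip, which is why the single logical memory space matters for the claim.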